Reinforcement learning provides a powerful and flexible framework for automated acquisition of robotic motion skills. However, applying reinforcement learning requires a sufficiently detailed representation of the state, including the configuration of task-relevant objects. We present an approach that automates state-space construction by learning a state representation directly from camera images. Our method uses a deep spatial autoencoder to acquire a set of feature points that describe the environment for the current task, such as the positions of objects, and then learns a motion skill with these feature points using an efficient reinforcement learning method based on local linear models. The resulting controller reacts continuously to the learned feature points, allowing the robot to dynamically manipulate objects in the world with closed-loop control. We demonstrate our method with a PR2 robot on tasks that include pushing a free-standing toy block, picking up a bag of rice using a spatula, and hanging a loop of rope on a hook at various positions. In each task, our method automatically learns to track task-relevant objects and manipulate their configuration with the robot’s arm.
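The abstract above does not say how a feature point is read out of a convolutional response map. One common readout, assumed here purely for illustration, is a spatial soft-argmax: take a softmax over all pixel positions of one channel and return the expected image coordinate. The function name, the `temperature` parameter, and the toy map below are hypothetical and are not taken from the paper.

```python
import math


def spatial_soft_argmax(activation, temperature=1.0):
    """Return the expected (x, y) position of one 2D activation map.

    `activation` is a list of rows; x indexes columns and y indexes rows.
    """
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(max(row) for row in activation)
    weights = [
        [math.exp((value - peak) / temperature) for value in row]
        for row in activation
    ]
    total = sum(sum(row) for row in weights)
    # Softmax-weighted average of the column and row indices.
    x = sum(w * col for row in weights for col, w in enumerate(row)) / total
    y = sum(w * r for r, row in enumerate(weights) for w in row) / total
    return x, y


# Toy 3x4 response map with one strong activation at row 1, column 2.
toy_map = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 50.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
print(spatial_soft_argmax(toy_map))  # very close to (2.0, 1.0)
```

Because the output is a smooth function of the activations, a readout of this kind can be trained end to end, and each channel yields one low-dimensional point that a controller can consume.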

Tag: 2015

Paper: Learning Deep Control Policies for Autonomous Aerial Vehicles with MPC-Guided Policy Search

Model predictive control (MPC) is an effective method for controlling robotic systems, particularly autonomous aerial vehicles such as quadcopters. However, application of MPC can be computationally demanding, and typically requires estimating the state of the system, which can be challenging in complex, unstructured environments. Reinforcement learning can in principle forego the need for explicit state estimation and acquire a policy that directly maps sensor readings to actions, but is difficult to apply to underactuated systems that are liable to fail catastrophically during training, before an effective policy has been found. We propose to combine MPC with reinforcement learning in the framework of guided policy search, where MPC is used to generate data at training time, under full state observations provided by an instrumented training environment. This data is used to train a deep neural network policy, which is allowed to access only the raw observations from the vehicle’s onboard sensors. After training, the neural network policy can successfully control the robot without knowledge of the full state, and at a fraction of the computational cost of MPC. We evaluate our method by learning obstacle avoidance policies for a simulated quadrotor, using simulated onboard sensors and no explicit state estimation at test time.
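The supervised step described above, in which a teacher with full state access produces actions and a policy is fitted on sensor readings alone, can be sketched on a toy 1D problem. This is not the paper's algorithm: the teacher below is a fixed feedback law standing in for MPC, the sensor model is invented, and the policy is a straight line rather than a deep network. Full guided policy search also alternates between teacher and policy, which this sketch omits.

```python
def teacher_action(position):
    """Stand-in for MPC: a full-state feedback law (hypothetical gain)."""
    return -1.5 * position


def sensor_reading(position):
    """Hypothetical onboard sensor: an affine function of the hidden state."""
    return 2.0 * position + 1.0


def fit_linear_policy(observations, actions):
    """Least-squares fit of action = slope * observation + intercept."""
    n = len(observations)
    mean_o = sum(observations) / n
    mean_a = sum(actions) / n
    cov = sum((o - mean_o) * (a - mean_a) for o, a in zip(observations, actions))
    var = sum((o - mean_o) ** 2 for o in observations)
    slope = cov / var
    intercept = mean_a - slope * mean_o
    return slope, intercept


# Training time: the teacher sees the true state, but only
# (observation, action) pairs are kept as training data.
states = [i / 10.0 for i in range(-20, 21)]
observations = [sensor_reading(s) for s in states]
actions = [teacher_action(s) for s in states]
slope, intercept = fit_linear_policy(observations, actions)


def policy(observation):
    """Test-time policy: uses the sensor reading only, never the state."""
    return slope * observation + intercept


print(policy(sensor_reading(0.4)), teacher_action(0.4))
```

At test time `policy` never touches `position`, which mirrors the abstract's point that the trained network runs without explicit state estimation.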

Paper: Can Deep Learning Help You Find The Perfect Match?

Is he/she my type or not? The answer to this question depends on the personal preferences of the one asking it. The individual process of obtaining a full answer may generally be difficult and time consuming, but often an approximate answer can be obtained simply by looking at a photo of the potential match. Such approximate answers based on visual cues can be produced in a fraction of a second, a phenomenon that has led to a series of recently successful dating apps in which users rate others positively or negatively using primarily a single photo. In this paper we explore using convolutional networks to create a model of an individual’s personal preferences based on rated photos. This introduced task is difficult due to the large number of variations in profile pictures and the noise in attractiveness labels. Toward this task we collect a dataset comprised of 9364 pictures and binary labels for each. We compare performance of convolutional models trained in three ways: first directly on the collected dataset, second with features transferred from a network trained to predict gender, and third with features transferred from a network trained on ImageNet. Our findings show that ImageNet features transfer best, producing a model that attains 68.1% accuracy on the test set and is moderately successful at predicting matches.

DeepDriving: Learning Affordance for Direct Perception in Autonomous Driving

The Princeton University Vision Group has announced the paper and code for its work on vision-based driving for autonomous vehicles, carried out with support from Google, Intel, and NVIDIA. In the video below you can watch a comparison between existing methods and the Convolutional Neural Network approach, in particular for how autonomous vehicles handle lane and vehicle tracking.

Abstract:

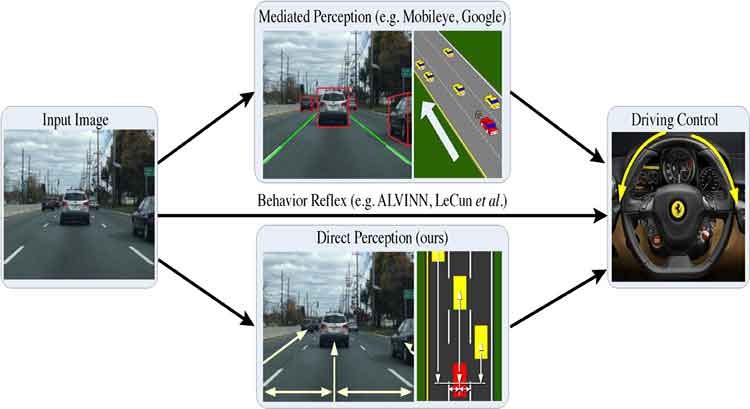

Today, there are two major paradigms for vision-based autonomous driving systems: mediated perception approaches that parse an entire scene to make a driving decision, and behavior reflex approaches that directly map an input image to a driving action by a regressor. In this paper, we propose a third paradigm: a direct perception based approach to estimate the affordance for driving. We propose to map an input image to a small number of key perception indicators that directly relate to the affordance of a road/traffic state for driving. Our representation provides a set of compact yet complete descriptions of the scene to enable a simple controller to drive autonomously. Falling in between the two extremes of mediated perception and behavior reflex, we argue that our direct perception representation provides the right level of abstraction. To demonstrate this, we train a deep Convolutional Neural Network (CNN) using 12 hours of human driving in a video game and show that our model can work well to drive a car in a very diverse set of virtual environments. Finally, we also train another CNN for car distance estimation on the KITTI dataset. The results show that the direct perception approach can generalize well to real driving images.
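To make the idea of a simple controller driven by a few perception indicators concrete, here is a minimal sketch. It assumes three indicators (heading angle, lateral offset from the lane center, distance to the preceding car) and a proportional rule for steering and speed. The field names, gains, lane width, and sign conventions are all hypothetical and are not claimed to match the paper's controller.

```python
from dataclasses import dataclass


@dataclass
class Affordance:
    """Hypothetical perception indicators predicted from one image."""
    angle: float           # heading relative to the road tangent, radians
    dist_to_center: float  # lateral offset from the lane center, meters
    dist_to_car: float     # gap to the preceding car, meters


def simple_controller(a, lane_width=4.0, steer_gain=1.0,
                      safe_gap=20.0, cruise_speed=20.0):
    """Map indicators to (steering in [-1, 1], target speed in m/s)."""
    # Steer against both the heading error and the normalized lateral offset.
    steer = steer_gain * (a.angle - a.dist_to_center / lane_width)
    steer = max(-1.0, min(1.0, steer))
    # Slow down linearly once the gap drops below the safe distance.
    target_speed = cruise_speed * min(1.0, a.dist_to_car / safe_gap)
    return steer, target_speed


print(simple_controller(Affordance(angle=0.0, dist_to_center=1.0, dist_to_car=10.0)))
```

The point of the sketch is the interface: the network only has to predict a handful of numbers, and everything after that is a few lines of arithmetic.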

Deep Learning Summer School 2015

The Deep Learning Summer School was held in Montreal, Canada, in August 2015. The 10-day event featured talks by experts on the application areas of deep learning, along with autonomous system demos. The talks are listed below by day, and you can download and review them.

| Day 1 – 3 August 2015 |
|---|
| Pascal Vincent: Intro to ML |
| Yoshua Bengio: Theoretical motivations for Representation Learning & Deep Learning |
| Leon Bottou: Intro to multi-layer nets |

| Day 2 – 4 August 2015 |
|---|
| Hugo Larochelle: Neural nets and backprop |
| Leon Bottou: Numerical optimization and SGD, Structured problems & reasoning |
| Hugo Larochelle: Directed Graphical Models and NADE |
| Intro to Theano |

| Day 3 – 5 August 2015 |
|---|
| Aaron Courville: Intro to undirected graphical models |
| Honglak Lee: Stacks of RBMs |
| Pascal Vincent: Denoising and contractive auto-encoders, manifold view |

| Day 4 – 6 August 2015 |
|---|
| Roland Memisevic: Visual features |
| Honglak Lee: Convolutional networks |
| Graham Taylor: Learning similarity |

| Day 5 – 7 August 2015 |
|---|
| Chris Manning: NLP 101 |
| Graham Taylor: Modeling human motion, pose estimation and tracking |
| Chris Manning: NLP / Deep Learning |

| Day 6 – 8 August 2015 |
|---|
| Ruslan Salakhutdinov: Deep Boltzmann Machines |
| Adam Coates: Speech recognition with deep learning |
| Ruslan Salakhutdinov: Multi-modal models |

| Day 7 – 9 August 2015 |
|---|
| Ian Goodfellow: Structure of optimization problems |
| Adam Coates: Systems issues and distributed training |
| Ian Goodfellow: Adversarial examples |

| Day 8 – 10 August 2015 |
|---|
| Phil Blunsom: From language modeling to machine translation |
| Richard Socher: Recurrent neural networks |
| Phil Blunsom: Memory, Reading, and Comprehension |

| Day 9 – 11 August 2015 |
|---|
| Richard Socher: DMN for NLP |
| Mark Schmidt: Smooth, Finite, and Convex Optimization |
| Roland Memisevic: Visual Features II |

| Day 10 – 12 August 2015 |
|---|
| Mark Schmidt: Non-Smooth, Non-Finite, and Non-Convex Optimization |
| Aaron Courville: VAEs and deep generative models for vision |
| Yoshua Bengio: Generative models from auto-encoder |

Click here to download all of the talks.

Sources: